Model deployment patterns: hands-on dynamic deployment using multiple containers [3/4]

This post continues our series on model deployment patterns. The previous post covered dynamic deployment and its various implementations: on a virtual machine, in a container, or with a serverless approach.

In this post, we’ll explore a practical example of dynamic deployment, examining each component and reviewing the results.

There are several approaches to building a dynamic deployment architecture, but we’ll be using the following technologies:

- Docker

- Docker Compose

- FastAPI

- Nginx

- Scikit-learn

For learning purposes, let's use the 20 Newsgroups dataset, loaded with fetch_20newsgroups from sklearn.datasets. This dataset contains approximately 18,000 newsgroup posts organized into 20 categories and is commonly used for text classification tasks such as topic classification.

We'll use it to train a classifier that predicts which of the 20 categories a given text belongs to.

To keep the focus on deployment, we’ll omit the model evaluation step.

Step 0. Installing dependencies

For this project, we’ll need to install the following packages:

- pandas

- fastapi

- uvicorn

- scikit-learn

- numpy

- joblib
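All six packages can be installed in one go with pip (pip install pandas fastapi uvicorn scikit-learn numpy joblib). As a quick sanity check before moving on, the sketch below reports which of them are present in the current environment. It uses only the standard library's importlib.metadata; installed_versions is a hypothetical helper of ours, not part of any of these packages.

```python
from importlib import metadata

# Distribution names as published on PyPI (not necessarily the import names).
REQUIRED = ["pandas", "fastapi", "uvicorn", "scikit-learn", "numpy", "joblib"]


def installed_versions(packages=REQUIRED):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    missing = [name for name, version in installed_versions().items() if version is None]
    print("missing:", missing or "none")
```

The same list is a natural starting point for the requirements file that the Docker image installs later.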

Step 1. Creating a deployable model

Using the data loaded with fetch_20newsgroups, let's build a scikit-learn pipeline with TfidfVectorizer as the preprocessing step and RandomForestClassifier as the model that predicts the newsgroup of a post.

After training, we'll serialize the fitted pipeline to a file with joblib. The API will then load this file to serve predictions.
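A minimal sketch of this step follows. The pipeline structure (TfidfVectorizer followed by RandomForestClassifier) and the joblib serialization come from the text; the random_state/n_jobs settings, training on category names instead of integer ids, and the file name model.joblib are our assumptions, not necessarily what the original code does.

```python
import joblib
from sklearn.datasets import fetch_20newsgroups
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import Pipeline


def build_pipeline() -> Pipeline:
    """TF-IDF text features followed by a random forest classifier."""
    return Pipeline(
        steps=[
            ("tfidf", TfidfVectorizer()),
            # Assumption: fixed seed for reproducibility, all CPU cores for training.
            ("clf", RandomForestClassifier(random_state=42, n_jobs=-1)),
        ]
    )


def train_and_save(texts, labels, path="model.joblib") -> Pipeline:
    """Fit the pipeline and serialize the whole object (vectorizer + model) with joblib."""
    pipeline = build_pipeline()
    pipeline.fit(texts, labels)
    joblib.dump(pipeline, path)
    return pipeline


if __name__ == "__main__":
    # Downloads the dataset on first use and caches it under ~/scikit_learn_data.
    data = fetch_20newsgroups(subset="train")
    # Assumption: train on category names so the API can return readable labels.
    labels = [data.target_names[i] for i in data.target]
    train_and_save(data.data, labels, "model.joblib")
```

Serializing the whole Pipeline, rather than only the classifier, means the API can accept raw text and apply exactly the TF-IDF vocabulary that was learned during training.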

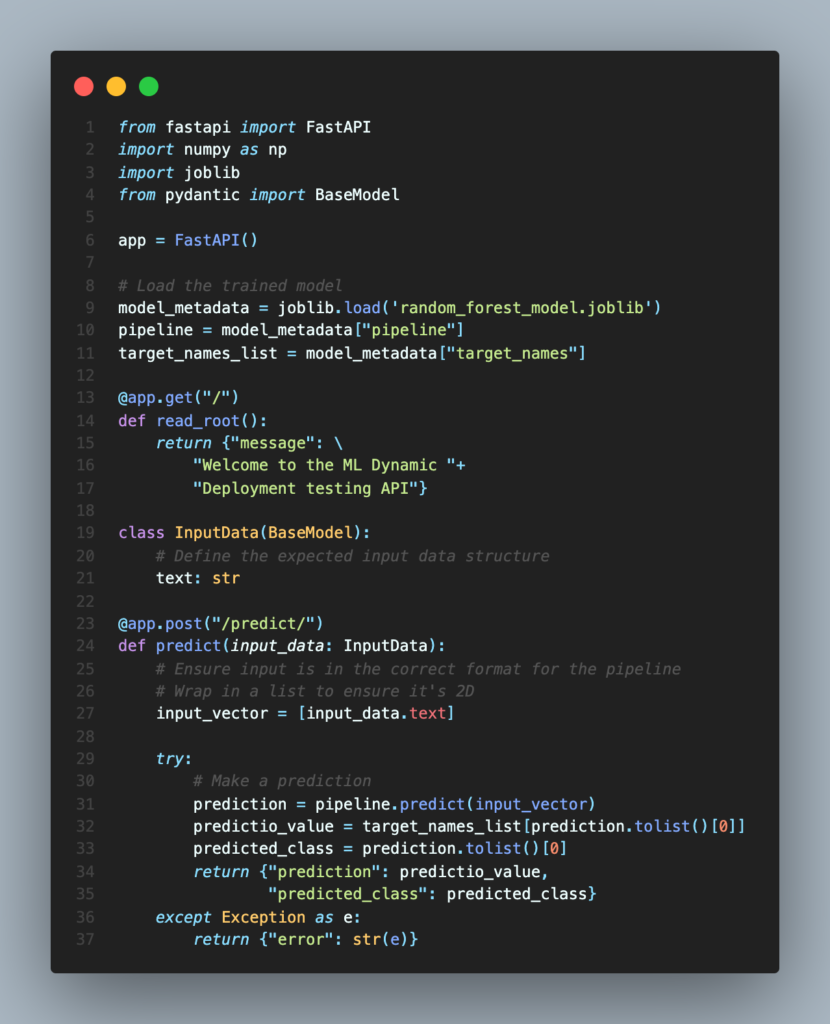

Step 2. Online serving using FastAPI

As the second step of our dynamic deployment, let's create the API using FastAPI.

FastAPI is a web framework for building APIs with Python. It is designed to make application development quick and simple, which lets us define, in just a few lines, the two routes that form our interface with the model:

- @app.get("/"): shows that our API is online and ready to be consumed.

- @app.post("/predict/"): receives the input text, runs it through the trained model pipeline, and returns the prediction.

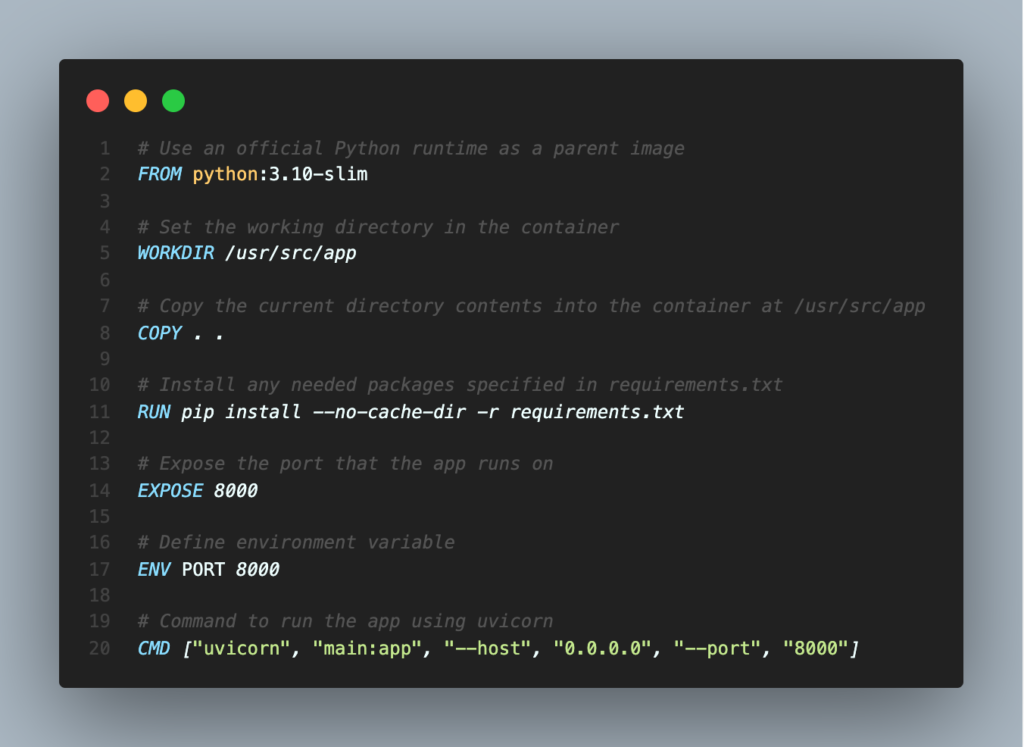

Step 3. Creating a Docker image (Dockerfile)

To ensure our deployment is robust, consistent across environments, scalable, and reproducible, the next step is to use a Docker container to isolate our machine learning system and API.

In the Dockerfile below, we’ll define the python:3.10-slim image as the base image, set up the working directory in the container, install the necessary dependencies, specify the port for external connections, and define the command to start the Uvicorn server when the container runs.

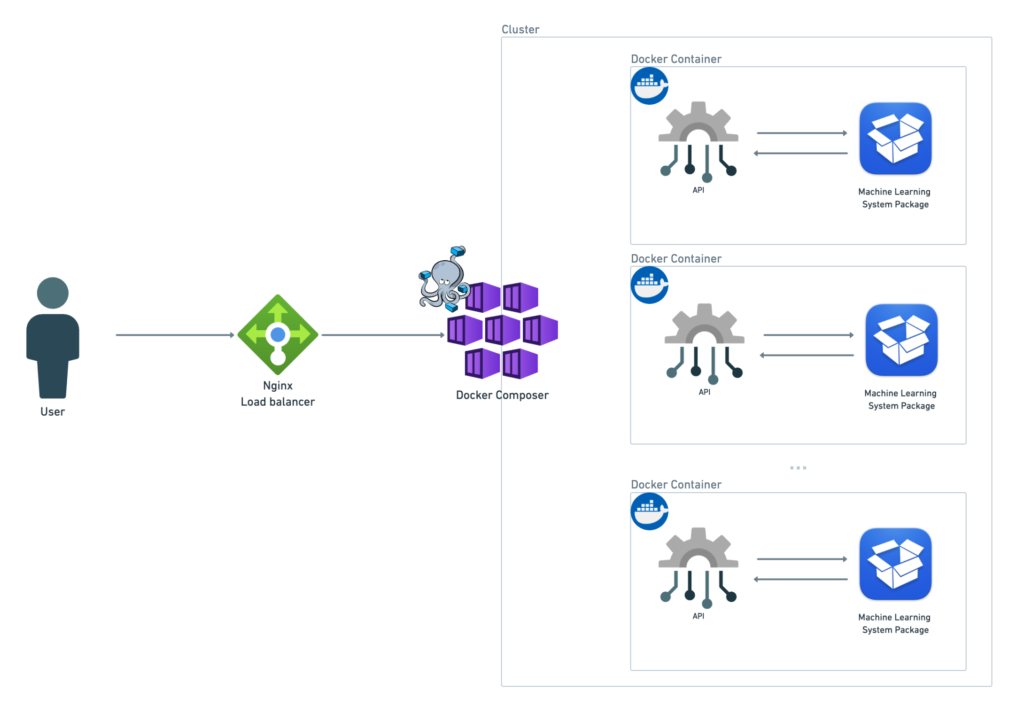

Step 4. Creating a multi-containerized application

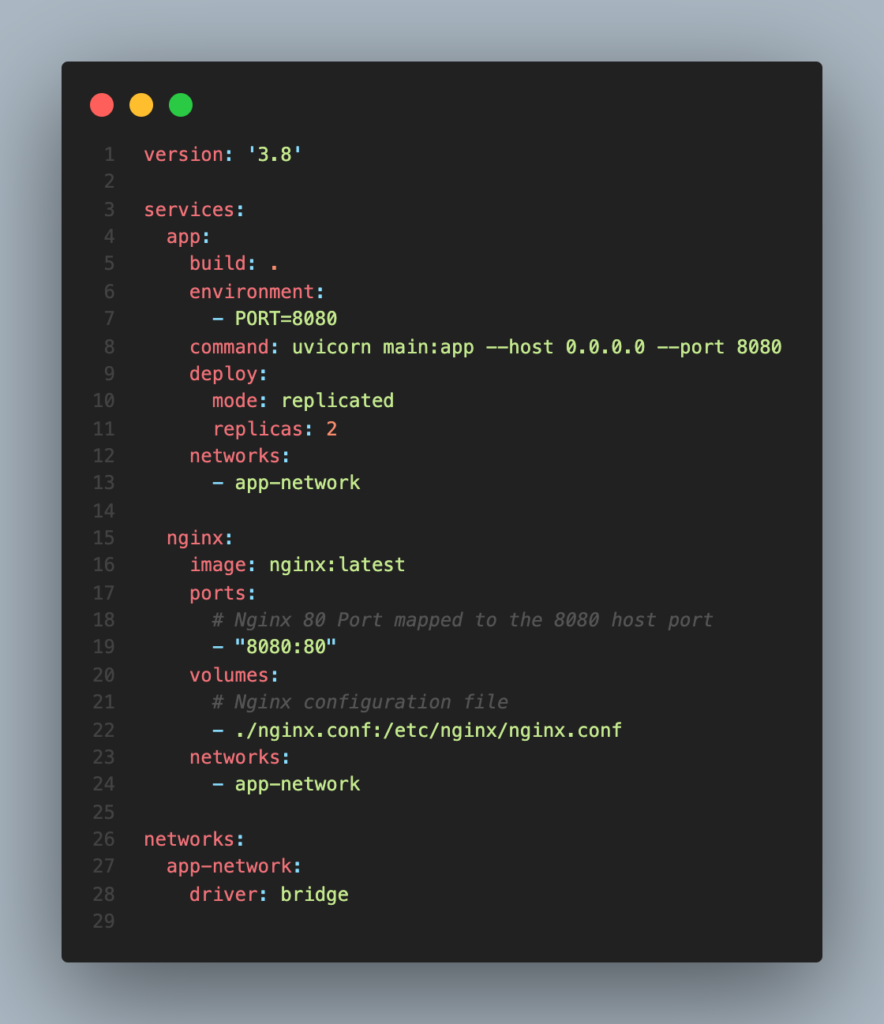

Docker Compose is a tool for defining and running multi-container Docker applications using a simple YAML file. This tool allows us to configure services, networks, and volumes, making it easier to manage a scalable, dynamic deployment.

In this tutorial, we'll define two services to demonstrate how to create a multi-container application.

The first service is app, where we define the parameters for our application container.

The second service is nginx, which we’ll configure in the next step as our load balancer.

Because the nginx service is defined in our docker-compose.yml alongside app, Nginx runs inside its own separate container. In total, three containers will be running:

- Two machine learning system containers: since we configured the app service with replicas: 2, Docker Compose will create two instances of our app, each running in its own container.

- One nginx container: the nginx service runs in its own container as well, separate from the app containers.

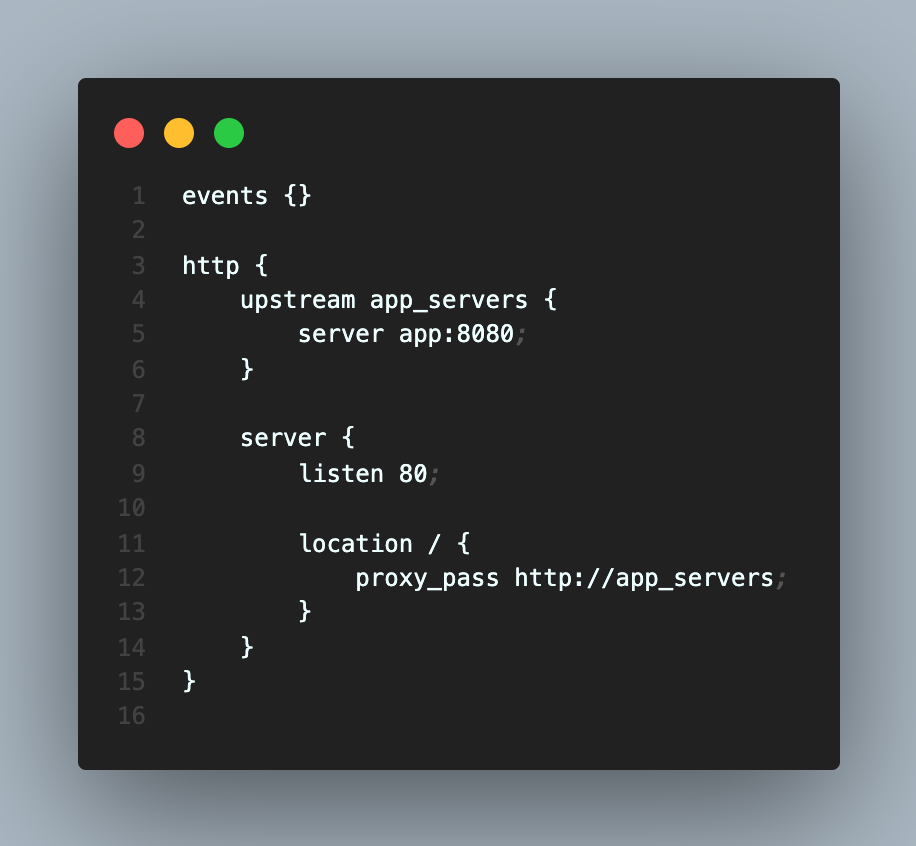

Step 5. Adding a load balancer

To further enhance this dynamic deployment, we'll add a load balancer to distribute traffic between the two app containers (replicas) defined in docker-compose.yml.

Nginx (pronounced “engine X”) is a powerful tool for managing and directing web traffic. It can function in two main ways:

- As a web server, delivering websites to users.

- As a load balancer, distributing incoming traffic across multiple containers or servers.

In the context of our containerized deployment, using Nginx as a load balancer is akin to having a host at a busy restaurant. When customers (web requests) arrive, the host (Nginx) distributes them evenly among different chefs (containers) in the kitchen. This ensures no single chef is overwhelmed, allowing all customers to receive their meals (responses) quickly and efficiently.
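Nginx's default strategy for an upstream group of servers is round-robin: each new request goes to the next server in the list. The toy simulation below is plain Python with no Nginx involved, and the backend names app-1 and app-2 are hypothetical; it only illustrates how a stream of requests would be split between our two replicas.

```python
from collections import Counter
from itertools import cycle


def round_robin(backends, requests):
    """Assign each request to the next backend in turn, wrapping around at the end."""
    pool = cycle(backends)
    return [(request, next(pool)) for request in requests]


if __name__ == "__main__":
    assignments = round_robin(["app-1", "app-2"], [f"req-{i}" for i in range(6)])
    for request, backend in assignments:
        print(request, "->", backend)
    # Each replica ends up with the same number of requests.
    print(Counter(backend for _, backend in assignments))
```

Real Nginx also supports per-server weights and other methods such as least_conn, and it temporarily skips servers it considers unavailable, so this models only the default behavior.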

Next, let’s review the content of the nginx.conf file:

Step 6. Connecting the dots

The last step is to connect all the pieces we built: a containerized dynamic deployment behind a load balancer, able to scale as a multi-container application.

To test the system, we only need to run two commands. The first one builds and starts the containers defined in docker-compose.yml. We'll use the --build option to force Docker Compose to rebuild the images before starting the containers, even if the images already exist:

docker-compose up --build
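docker-compose up --build keeps running in the foreground, and the app containers may need a few seconds before Uvicorn accepts connections. A small readiness check against the GET / route, run from a second terminal, avoids sending the first request too early. This is a sketch using only the standard library; the URL (Nginx on localhost:8080), the retry settings, and the function name wait_until_ready are assumptions.

```python
import http.client
import time
import urllib.request

# Assumption: Nginx is published on port 8080 of the host and forwards "/" to the app.
HEALTH_URL = "http://localhost:8080/"


def wait_until_ready(url=HEALTH_URL, attempts=30, delay=1.0, timeout=2.0):
    """Poll the health route until it returns HTTP 200 or the attempts run out."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                if response.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused, timeout, or an HTTP error such as 502 while the
            # app containers are still starting (urllib's URLError subclasses OSError).
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


if __name__ == "__main__":
    print("API ready" if wait_until_ready() else "API did not come up in time")
```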

With the containers running, we’ll test our system by sending a POST request to the local web server. The Nginx load balancer, running on port 8080 of the host, will forward the requests to one of the two application containers running on port 8080 within the Docker network.

curl -X POST "http://localhost:8080/predict/" -H "Content-Type: application/json" -d '{"text": "Incredible Prices! I have a list of products to sell. Im moving to other city and I want to sell everything. "}'
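If you prefer Python over curl, the same JSON POST can be sent with the standard library. The helper names below are hypothetical, and the URL assumes Nginx is published on localhost:8080 as in the curl command.

```python
import json
import urllib.request

# Assumption: Nginx listens on port 8080 of the host, as in the curl example.
API_URL = "http://localhost:8080/predict/"


def build_predict_request(text, url=API_URL):
    """Build the same JSON POST request that the curl command sends."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def request_prediction(text, url=API_URL, timeout=10.0):
    """Send the request through the load balancer and decode the JSON response."""
    with urllib.request.urlopen(build_predict_request(text, url), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    print(request_prediction("Incredible Prices! I have a list of products to sell."))
```

With round-robin balancing, repeated calls may be served by different app containers; since both replicas run the same image and model file, the same text should get the same prediction either way.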

Let's see what the response looks like:

Conclusion

In this post, we explored the practical aspects of dynamic deployment by creating a multi-containerized application using Docker, FastAPI, and Nginx.

We trained a simple text classifier and deployed it in a scalable environment. By integrating all the components, we demonstrated how to efficiently serve predictions online with load balancing, providing a solid foundation for deploying more complex models in the future.

Stay tuned for the final post in our deployment series. Share your thoughts, comments, and feedback below on what you find most challenging about dynamic deployment.

