Model deployment patterns: A Hands-On Guide to Dynamic Deployment on Google Kubernetes Engine [4/4]

As we conclude our series on model deployment patterns, this final post explores one of the most widely used approaches: dynamic deployment on Google Kubernetes Engine (GKE).

Building on the simplicity of static deployment and the versatility of dynamic deployment across platforms, this post takes a hands-on approach. You’ll implement dynamic deployment step by step using GKE, a powerful platform for container orchestration.

We’ll guide you through deploying a machine learning model within a dynamic, containerized setup. You’ll learn to run the model in Kubernetes Pods managed by a Deployment, configure load balancing through a Service, and manage the deployment so your application is both scalable and highly available.

Ready to take your deployment skills to the next level with dynamic deployment on GKE? Let’s code!

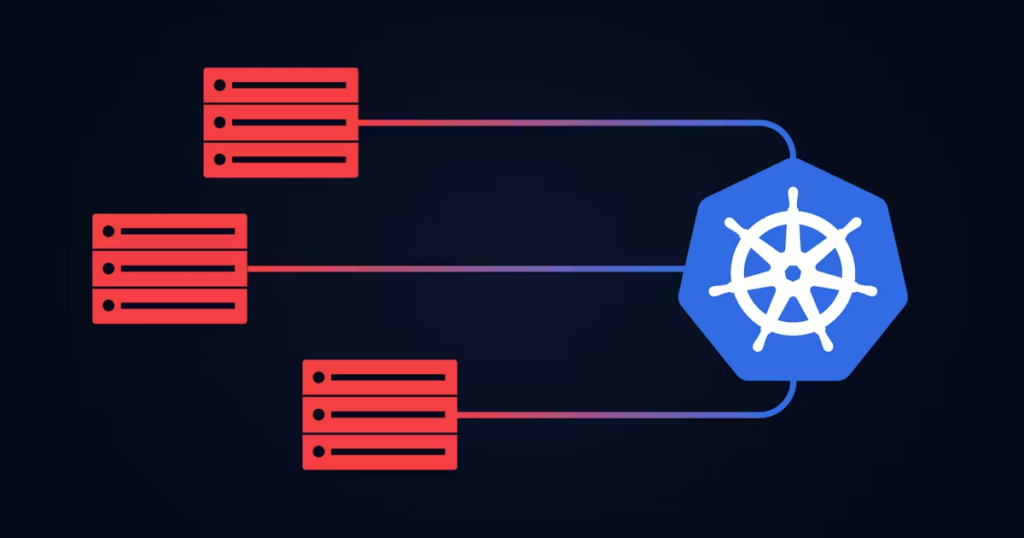

1. Kubernetes: The Orchestrator of Containers

Machine learning applications are inherently complex, with numerous dependencies that are often difficult to track. A small change in a single parameter can ripple through the system, impacting its performance and reliability. That’s where Kubernetes comes in—it’s designed to help maintain reproducibility and stability in such intricate environments.

Much like a maestro conducting an orchestra, Kubernetes orchestrates containers (our musicians) to deliver an efficient and harmonious performance—your machine learning application.

Kubernetes (K8s) has become one of the most popular tools for deploying models to production. Why? It automates the deployment, scaling, and management of containerized applications [1]. While we’ll dive into its components and finer details in future posts, a basic understanding of its purpose is sufficient for now.

That said, managing a Kubernetes cluster comes with its own set of challenges, including:

1.1. Expertise gap

Successfully operating Kubernetes demands a specific skill set and deep knowledge, which many organizations may not possess. This can pose a significant challenge, especially for ML teams that prefer to focus their efforts on model development rather than infrastructure management. Bridging this gap often requires dedicated training or hiring specialized talent, adding complexity and costs to the deployment process.

1.2. Complexity of setup and management

Even with the right expertise, setting up and managing a Kubernetes cluster for ML workloads can still be a complex and time-consuming task. Each step, from configuring nodes to managing storage and networking, requires careful attention to detail. For many teams, this can feel overwhelming, especially when juggling multiple responsibilities or aiming to quickly scale their ML models. The setup complexity can slow down progress and divert focus from the core objectives of model development.

1.3. Integration with ML cloud services

Building an AI solution requires numerous components to work together—storage, logging, data processing tools, monitoring, and more. Self-managed Kubernetes clusters often lack seamless integration with these critical services, which are typically hosted on cloud platforms like Google Cloud Platform (GCP). This can create friction when trying to leverage cloud-native tools designed for machine learning.

To address these challenges and simplify the integration process, Google created Google Kubernetes Engine (GKE). GKE helps bridge the gap between Kubernetes and essential cloud services, offering a more streamlined and efficient solution for managing ML workloads.

2. What is Google Kubernetes Engine (GKE)?

An easier way to use Kubernetes is through managed services like Google Kubernetes Engine.

GKE is a Google-managed implementation of Kubernetes that allows us to deploy and operate containerized applications at scale using Google’s infrastructure [2]. It’s particularly well-suited for deploying machine learning applications quickly and reliably, as it provides the power of Kubernetes while handling many of the underlying components, such as the control plane and nodes, for us [2].

Some of the key benefits of Google Kubernetes Engine [2] include:

2.1. Platform management

With GKE’s Autopilot mode, we can quickly deploy clusters with best-practice configurations automatically applied. Additionally, it offers flexible maintenance windows and exclusions, allowing us to customize the upgrade type and scope to align with business needs and architectural constraints. This streamlined approach helps ensure smooth operations while maintaining control over the deployment process.

2.2. Improved security posture

GKE comes with built-in security features, including integrations with Google Cloud Logging and Monitoring through Google Cloud Observability. It also provides integrated security posture monitoring tools, such as the security posture dashboard, helping teams track and manage security risks effectively.

2.3. Cost optimization

In Autopilot mode, we pay only for the compute resources (CPU, memory, and ephemeral storage) requested by running Pods. Costs can be reduced further by running fault-tolerant workloads, such as batch jobs, on Spot Pods, which use spare capacity at a discount but can be reclaimed at short notice.

By having Google manage both the nodes and the control plane in Autopilot mode, we can significantly reduce operational overhead and focus more on our application.
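Because Autopilot bills for what running Pods request, a rough hourly estimate is simple arithmetic over those requests. The sketch below is a simplified illustration under stated assumptions: the per-vCPU-hour and per-GiB-hour prices are hypothetical placeholders (check the GKE pricing page for real rates), the helper names are invented for this example, and it ignores ephemeral-storage charges and the minimum requests Autopilot enforces.

```python
from decimal import Decimal

# Suffixes used in Kubernetes memory quantities: binary (Ki, Mi, ...) and decimal (k, M, ...)
MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}
GIB = Decimal(2**30)


def parse_cpu(quantity: str) -> Decimal:
    """Convert a CPU request such as '500m' (millicores) or '2' into vCPUs."""
    if quantity.endswith("m"):
        return Decimal(quantity[:-1]) / 1000
    return Decimal(quantity)


def parse_memory_gib(quantity: str) -> Decimal:
    """Convert a memory request such as '512Mi' or '1G' into GiB."""
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * factor / GIB
    return Decimal(quantity) / GIB  # a bare number means bytes


def hourly_cost(pods, price_per_vcpu_hour: str, price_per_gib_hour: str) -> Decimal:
    """Price the summed CPU and memory requests of the running Pods.

    `pods` is a list of dicts such as {"cpu": "500m", "memory": "512Mi"}.
    The prices are hypothetical placeholders supplied by the caller.
    """
    vcpus = sum((parse_cpu(p["cpu"]) for p in pods), Decimal(0))
    gib = sum((parse_memory_gib(p["memory"]) for p in pods), Decimal(0))
    return vcpus * Decimal(price_per_vcpu_hour) + gib * Decimal(price_per_gib_hour)


if __name__ == "__main__":
    # Two hypothetical ml-server replicas priced at made-up rates
    replicas = [{"cpu": "500m", "memory": "512Mi"}] * 2
    print(hourly_cost(replicas, price_per_vcpu_hour="0.05", price_per_gib_hour="0.005"))
```

Using Decimal rather than float keeps the currency arithmetic exact, which matters when small per-hour amounts are multiplied over a month.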

2.4. Reliability and availability

Google Kubernetes Engine provides a highly available control plane and worker nodes in Autopilot mode, as well as in regional Standard clusters. It also offers pod-level SLAs in Autopilot clusters, along with proactive monitoring and recommendations to help mitigate potential disruptions caused by upcoming deprecations.

Now that we’ve covered the basics of Kubernetes and GKE, let’s dive into a step-by-step guide on deploying a machine learning application to GKE.

3. Deploying the machine learning app to GKE

To demonstrate how easy it is to deploy an application on Kubernetes using GCP, let’s deploy a machine learning app to Google Kubernetes Engine. All the steps in this guide were adapted from Google’s quickstart, Deploy an app to a GKE cluster [3].

3.1. Prepare the Machine Learning Web App

To focus on the deployment steps, we’ll reuse the machine learning application and Docker image from the previous post, Model deployment patterns: hands-on dynamic deployment using multi-containers [3/4]. For convenience, the code is repeated below.

Creating the deployable model – random_forest_model.joblib

```python
from sklearn.datasets import fetch_20newsgroups
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import Pipeline
from sklearn.model_selection import train_test_split
import joblib

# Load the data, stripping headers, footers, and quotes
# so the model learns from the message bodies
newsgroups = fetch_20newsgroups(
    subset='train',
    remove=('headers', 'footers', 'quotes')
)
X, y = newsgroups.data, newsgroups.target
target_names_list = newsgroups.target_names

# Split the data into training and validation sets
X_train, X_val, y_train, y_val = train_test_split(
    X, y,
    test_size=0.2,
    random_state=42
)

# Create the pipeline: TF-IDF features followed by a random forest classifier
pipeline = Pipeline([
    ('tfidf', TfidfVectorizer(stop_words='english')),
    ('clf', RandomForestClassifier(
        n_estimators=100,
        random_state=42
    ))
])

# Train the model
pipeline.fit(X_train, y_train)

# Check accuracy on the held-out validation set
print(f"Validation accuracy: {pipeline.score(X_val, y_val):.3f}")

# Bundle the pipeline with the class names so the API can map
# predicted class indices back to human-readable labels
model_metadata = {
    "pipeline": pipeline,
    "target_names": target_names_list
}

# Save the model
joblib.dump(model_metadata, 'random_forest_model.joblib')
print("Model saved as 'random_forest_model.joblib'")
```

FastAPI – main.py

```python
from fastapi import FastAPI
import joblib
from pydantic import BaseModel

app = FastAPI()

# Load the trained pipeline and class names once, at startup
model_metadata = joblib.load('random_forest_model.joblib')
pipeline = model_metadata["pipeline"]
target_names_list = model_metadata["target_names"]


@app.get("/")
def read_root():
    return {"message": "Welcome to the ML Dynamic Deployment testing API"}


class InputData(BaseModel):
    # Define the expected input data structure
    text: str


@app.post("/predict/")
def predict(input_data: InputData):
    # The pipeline expects an iterable of documents,
    # so wrap the single text in a list
    input_vector = [input_data.text]
    try:
        # Make a prediction and map the class index to its name
        prediction = pipeline.predict(input_vector)
        predicted_class = int(prediction[0])
        prediction_value = target_names_list[predicted_class]
        return {"prediction": prediction_value,
                "predicted_class": predicted_class}
    except Exception as e:
        return {"error": str(e)}
```

Dockerfile

```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.10-slim

# Set the working directory in the container
WORKDIR /usr/src/app

# Copy the current directory contents (main.py, requirements.txt, and
# random_forest_model.joblib) into the container at /usr/src/app
COPY . .

# Install any needed packages specified in requirements.txt
RUN pip install --no-cache-dir -r requirements.txt

# Document the port that the app listens on
EXPOSE 8000

# Define environment variable (informational; the CMD below sets the port explicitly)
ENV PORT=8000

# Command to run the app using uvicorn
CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]
```

3.2. Prepare the GCP environment

To set up your environment for configuring GKE, follow these steps:

- Create a new project in Google Cloud Platform (GCP).

- Ensure billing is enabled for your project (if you don’t have GCP credits, the cost for this quickstart tutorial will be under $0.50).

- Enable the Artifact Registry and Google Kubernetes Engine APIs.

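- Finally, activate Cloud Shell by clicking the Activate Cloud Shell button in the Google Cloud console. Cloud Shell comes with gcloud and kubectl preinstalled, so the commands in the following sections can be run from there.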

3.3. Create a GKE cluster

To start the deployment, let’s first create a Kubernetes cluster that includes a control plane to manage the cluster and worker nodes where your applications will run.

The worker nodes are Compute Engine VMs that handle the necessary Kubernetes processes. Applications are deployed to the cluster, and the nodes execute them.

To begin, create an Autopilot cluster named “ml-cluster” using the following command:

```shell
gcloud container clusters create-auto ml-cluster --location=us-central1
```

3.4. Authenticating to the cluster

After creating the cluster, we need to authenticate before we can interact with it. The following command fetches the cluster’s endpoint and credentials and configures kubectl to use them:

```shell
gcloud container clusters get-credentials ml-cluster --location us-central1
```

3.5. Deploying the ML application

With the cluster running and kubectl authenticated against it, we can deploy the machine learning web application described in section 3.1.

GKE uses Kubernetes objects to configure and manage the components of your application. One of these objects is the Deployment, which is meant for stateless applications, that is, applications that don’t store data on the server, such as web servers.

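The Deployment functions as a controller that manages the application’s lifecycle. It continuously compares the desired state (for example, how many replicas should be running) with the actual state of the cluster and replaces Pods that fail, keeping the application available even when individual components break.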
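The control loop can be illustrated with a toy reconcile function. This is a simplified, hypothetical sketch rather than Kubernetes’ implementation: in a real cluster the Deployment controller manages ReplicaSets, and the ReplicaSet controller creates and deletes Pods through the API server. Here, Pods are just a name-to-phase dictionary, and the function returns the actions that would bring the observed state back to the desired replica count.

```python
# Toy reconcile step illustrating the control-loop idea behind a Deployment.
# Hypothetical and simplified; not how Kubernetes is actually implemented.

TERMINAL_PHASES = {"Failed", "Succeeded"}


def reconcile(desired_replicas: int, pods: dict) -> list:
    """Return the actions needed to move from the observed Pods to the desired count.

    `pods` maps a Pod name to its phase, e.g. {"ml-server-a": "Running"}.
    Actions are ("delete", name) or ("create", None); for creates, the name
    is left to the API server to generate.
    """
    actions = []
    # Pods that have stopped no longer count as replicas; this toy version deletes them
    for name, phase in pods.items():
        if phase in TERMINAL_PHASES:
            actions.append(("delete", name))
    active = [name for name, phase in pods.items() if phase not in TERMINAL_PHASES]

    # Too few active replicas: create replacements
    for _ in range(desired_replicas - len(active)):
        actions.append(("create", None))
    # Too many: delete the surplus
    for name in active[desired_replicas:]:
        actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    observed = {"ml-server-a": "Running", "ml-server-b": "Failed"}
    print(reconcile(desired_replicas=2, pods=observed))
    # [('delete', 'ml-server-b'), ('create', None)]
```

A real controller runs this comparison repeatedly, reacting to changes reported by the API server, which is why a crashed or evicted Pod is replaced without manual intervention.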

Additionally, a Service makes your application accessible to users on the internet. It gives the group of Pods behind it a stable address, defines how traffic is routed to them, and load-balances incoming requests across them.
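To make the load-balancing idea concrete, here is a toy round-robin picker over a Service’s endpoints (the addresses of its ready Pods). It is an illustration only: the class name and IP addresses are hypothetical, and in a real cluster the selection is done by the cluster’s networking data plane, which does not necessarily use round-robin (kube-proxy in iptables mode, for instance, picks endpoints at random), so traffic is spread only approximately evenly.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Toy load balancer that hands requests to Pod endpoints in turn."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("a Service with no ready endpoints cannot route traffic")
        self._next = cycle(endpoints)

    def pick(self):
        # Return the endpoint that should receive the next request
        return next(self._next)


if __name__ == "__main__":
    # Hypothetical Pod IPs behind the ml-server Service
    balancer = RoundRobinBalancer(["10.8.0.11:8000", "10.8.0.12:8000", "10.8.0.13:8000"])
    hits = Counter(balancer.pick() for _ in range(9))
    print(hits)  # each endpoint receives 3 of the 9 requests
```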

3.5.1. Create the deployment

To run the ml-app in our cluster, we need to deploy it with the following command. This will create and manage a Deployment named ml-server, which will run the ml-app:1.0 container image:

```shell
kubectl create deployment ml-server \
    --image=us-docker.pkg.dev/google-samples/containers/gke/ml-app:1.0
```

This command sets up a Deployment named ml-server. A Deployment ensures that a defined number of replicas (copies) of the application are always running. By default, kubectl create deployment creates a single replica; the --replicas flag lets you request more.

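The --image flag specifies the container image to run. If you built the image from section 3.1 yourself, replace this path with the location where you pushed it, for example an Artifact Registry path of the form LOCATION-docker.pkg.dev/PROJECT_ID/REPOSITORY/IMAGE:TAG.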
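kubectl create deployment is the imperative route; the same object can also be written declaratively, which makes it easier to version and review. The sketch below builds a roughly equivalent Deployment as a Python dict and prints it as JSON, which kubectl apply -f accepts as well as YAML. The function name and the container name are hypothetical; the app=<name> label mirrors what kubectl create deployment applies, and containerPort 8000 is taken from the Dockerfile in section 3.1.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1,
                        container_port: int = 8000) -> dict:
    """Build a Deployment roughly equivalent to `kubectl create deployment`.

    Like kubectl, it labels the Pods app=<name> and selects them by that label.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template's labels
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "ml-app",  # container name chosen for illustration
                        "image": image,
                        # Informational: uvicorn listens on 8000 per the Dockerfile
                        "ports": [{"containerPort": container_port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest(
        "ml-server",
        "us-docker.pkg.dev/google-samples/containers/gke/ml-app:1.0",
    )
    print(json.dumps(manifest, indent=2))
```

Saving the printed output as deployment.json and running kubectl apply -f deployment.json creates or updates the Deployment; changing replicas and applying again scales the application.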

3.5.2. Expose the deployment

After deploying the application to GKE, the next step is to make it accessible over the internet for users. This can be achieved by creating a Service, a Kubernetes resource designed to expose your application to external traffic.

Run the following command to create the Service:

```shell
kubectl expose deployment ml-server \
    --type LoadBalancer \
    --port 80 \
    --target-port 8000
```

This command directs Kubernetes to expose the ml-server Deployment by creating a Service. The Service is of type LoadBalancer, which enables access to the application from outside the Kubernetes cluster, such as from the internet or another external network.

- Service type (`LoadBalancer`): Specifies that the application should be reachable externally through a load balancer.
- External port (`80`): Sets the port users connect to. Port 80 is the standard port for HTTP traffic.
- Target port (`8000`): Specifies the port the application listens on inside the container. It must match the port uvicorn is started on in the Dockerfile from section 3.1. The Service forwards traffic from the external port (80) to this port.
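As with the Deployment, the Service created by kubectl expose can be expressed as a manifest. A minimal sketch under the same assumptions (hypothetical function name, Pods labelled app=ml-server by kubectl create deployment): targetPort defaults to 8000 because the Dockerfile in section 3.1 starts uvicorn on that port, and whatever image you deploy, targetPort must match the port the container actually listens on.

```python
import json


def load_balancer_service(name: str, port: int = 80, target_port: int = 8000) -> dict:
    """Build a Service roughly equivalent to
    `kubectl expose deployment NAME --type LoadBalancer --port 80 --target-port 8000`.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            # Route to Pods labelled app=<name>, as created by `kubectl create deployment`
            "selector": {"app": name},
            # port: what clients connect to; targetPort: where the container listens
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(load_balancer_service("ml-server"), indent=2))
```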

3.5.3. Inspect and view the application

1. To verify that a single ml-server Pod is running in the cluster, list the active Pods:

```shell
kubectl get pods
```

2. To check the details of the ml-server Service, including the external IP address assigned to it, run:

```shell
kubectl get service ml-server
```

3. To view your application in a web browser, navigate to:

```
http://EXTERNAL_IP
```

Replace EXTERNAL_IP with the address shown in the EXTERNAL-IP column of the kubectl get service ml-server output. If the column still shows `<pending>`, wait a minute or two for the load balancer to be provisioned and run the command again. Because the Service listens on port 80, the default HTTP port, no port number is needed in the URL.

For example, if the external IP address is 203.0.113.10, you would use:

```
http://203.0.113.10
```

This will direct you to your running application, where the browser should show the API’s welcome message.
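The same checks can be scripted. The sketch below, which uses only the Python standard library, reads kubectl’s JSON output to list running Pods and find the Service’s external IP, then sends a request to the /predict/ endpoint defined in main.py. The helper names are hypothetical, and it assumes kubectl is already configured (for example, in Cloud Shell after get-credentials) and that the Service has been assigned an external IP.

```python
import json
import subprocess
import urllib.request


def kubectl_json(*args: str) -> dict:
    """Run a kubectl command with `-o json` and parse its output.

    Assumes kubectl is installed and configured for the ml-cluster.
    """
    result = subprocess.run(
        ["kubectl", *args, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


def running_pod_names(pods_json: dict) -> list:
    """Names of the Pods whose phase is Running, from `kubectl get pods -o json`."""
    return [
        item["metadata"]["name"]
        for item in pods_json.get("items", [])
        if item.get("status", {}).get("phase") == "Running"
    ]


def external_ip(service_json: dict):
    """Load balancer address from `kubectl get service -o json`, or None while pending."""
    ingress = service_json.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None
    return ingress[0].get("ip") or ingress[0].get("hostname")


def build_predict_request(base_url: str, text: str) -> urllib.request.Request:
    """Prepare a JSON POST to the /predict/ endpoint defined in main.py."""
    return urllib.request.Request(
        base_url.rstrip("/") + "/predict/",
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(base_url: str, text: str, timeout: float = 10.0) -> dict:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(build_predict_request(base_url, text), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, from Cloud Shell: running_pod_names(kubectl_json("get", "pods", "-l", "app=ml-server")) lists the running replicas, and predict(f"http://{external_ip(kubectl_json('get', 'service', 'ml-server'))}", "some text") returns a dict with the prediction and predicted_class keys (or an error key, as main.py defines).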

3.6. Avoiding unexpected costs (clean up)

To prevent unexpected charges at the end of the month, it’s good practice to delete the resources you created once you no longer need them.

3.6.1. Remove the application’s service

To stop exposing the application, delete the application’s Service. This also releases the external load balancer that GKE provisioned for it, which would otherwise keep incurring charges:

```shell
kubectl delete service ml-server
```

3.6.2. Delete the Kubernetes cluster

Once you’re done, it’s important to delete the cluster itself to free up resources. Use the following command to delete the ml-cluster:

```shell
gcloud container clusters delete ml-cluster --location us-central1
```

By completing these steps, you’ll ensure that no unused resources remain active, keeping your project cost-efficient and well-managed.
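The two cleanup commands can also be bundled into a small script so neither is forgotten. A minimal sketch, assuming the names used in this guide (ml-server, ml-cluster, us-central1); it defaults to a dry run that only prints the commands, and it adds gcloud’s global --quiet flag so the cluster deletion does not stop at the interactive confirmation prompt when the commands are actually executed.

```python
import subprocess

# Names used throughout this guide
SERVICE = "ml-server"
CLUSTER = "ml-cluster"
LOCATION = "us-central1"

# Order matters: delete the Service first (releasing its load balancer), then the cluster
CLEANUP_COMMANDS = [
    ["kubectl", "delete", "service", SERVICE],
    # --quiet skips gcloud's interactive confirmation prompt
    ["gcloud", "container", "clusters", "delete", CLUSTER, "--location", LOCATION, "--quiet"],
]


def clean_up(dry_run: bool = True) -> list:
    """Print (dry run) or execute the cleanup commands; return what was (or would be) run."""
    executed = []
    for cmd in CLEANUP_COMMANDS:
        print(("DRY RUN: " if dry_run else "RUN: ") + " ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing command
        executed.append(cmd)
    return executed


if __name__ == "__main__":
    clean_up(dry_run=True)  # switch to False once the printed commands look right
```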

Conclusion

Throughout this series on model deployment patterns, we’ve covered the practical steps and key concepts behind getting machine learning models into production. Starting with static deployment, we saw how its straightforward approach works well in controlled environments. Then, we moved on to the versatility of dynamic deployment, highlighting how it adapts to more complex needs. Finally, we wrapped up with a hands-on example of using Google Kubernetes Engine (GKE) to simplify dynamic deployment and make it more accessible.

In this last post, we walked through deploying a machine learning application to GKE, showing how managed services can reduce the effort involved while still offering the reliability and scalability needed for production. With tools like GKE, managing Kubernetes becomes less about the complexity and more about building solutions that work.

As we finish this series, it’s worth remembering that deployment is where the real value of our work comes through. Models aren’t just code or pipelines; they’re tools meant to solve real problems. By focusing on deployment, we can bridge the gap between research and impact.

For those navigating this journey, take it step by step. Learn the patterns, experiment with the tools, and build the skills that make deployment feel less intimidating. Whether it’s static setups or fully managed platforms, there’s always a way to move forward. Keep exploring, and keep building—your work makes a difference.

References

[1] Kubernetes official website. https://kubernetes.io/

[2] Google Cloud. GKE overview. https://cloud.google.com/kubernetes-engine/docs/concepts/kubernetes-engine-overview

[3] Google Cloud. Quickstart: Deploy an app to a GKE cluster. https://cloud.google.com/kubernetes-engine/docs/deploy-app-cluster
